SubQuery Fancy Greeter - Basic Example

This basic example AI App is a good starting point for learning about prompt engineering and function tooling. It demonstrates the key features of SubQuery's AI App Framework.

Note: You can follow along with this tutorial using the example code here.

Prerequisites

To run an AI App locally, you must have the following tools installed:

- Docker: This tutorial will use Docker to run a local version of SubQuery's node.

- Deno: A recent version of Deno, the JavaScript runtime used by the SubQuery AI App Framework.

You will also need access to either an Ollama or OpenAI inference endpoint:

- Ollama: An endpoint for an Ollama instance, which can run on your local computer or be a commercial endpoint online, or

- OpenAI: You will need a paid API key.

1. Install the framework

Run the following command to install the SubQuery AI framework globally on your system:

deno install -g -f --allow-env --allow-net --allow-import --allow-read --allow-write --allow-ffi --allow-run --unstable-worker-options -n subql-ai jsr:@subql/ai-app-framework/cli

This will install the CLI and Runner. Make sure you follow the suggested instructions to add it to your path.

You can confirm installation by running subql-ai --help.

2. Create a New App

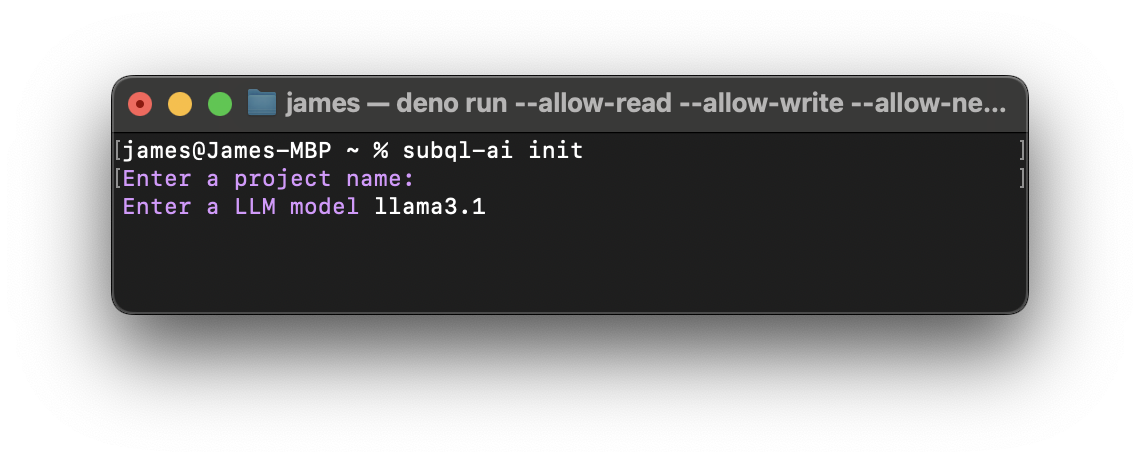

You can initialise a new app using subql-ai init. It will ask you to provide a name and either an OpenAI endpoint or an Ollama model to use.

After you complete the initialisation process, you will see a folder with your project name created inside the current directory. It should contain four files: project.ts, manifest.ts, docker-compose.yml, and README.md.

3. Review the Manifest File

The file manifest.ts defines key configuration options for your app. You can find the configuration specifics here.

The manifest file for a default project looks like the following:

import type { ProjectManifest } from "jsr:@subql/ai-app-framework";

const project: ProjectManifest = {

specVersion: "0.0.1",

// Specify any hostnames your tools will make network requests to

endpoints: [],

// Your project's runtime configuration options

config: {},

model: "llama3.2:1b",

entry: "./project.ts",

};

export default project;

As you can see, there are very few details to configure in our default example. The two most important settings are the model (a selection of models can be found here) and the entry, where you'll specify the path to your project's entry point.
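
Because model and entry matter most, it can help to sanity-check a manifest before running it. The following is a minimal sketch only: `ManifestLike` and `checkManifest` are hypothetical names that mirror the fields shown above, not the framework's own `ProjectManifest` type or validation logic.

```typescript
// Local stand-in for the fields shown in the default manifest.
// Assumption: this is NOT the framework's ProjectManifest type.
interface ManifestLike {
  specVersion: string;
  endpoints: string[];
  config: Record<string, unknown>;
  model: string;
  entry: string;
}

// Hypothetical helper: returns a list of human-readable problems.
function checkManifest(m: ManifestLike): string[] {
  const problems: string[] = [];
  if (m.model.trim() === "") problems.push("model must not be empty");
  if (m.entry.trim() === "") problems.push("entry must not be empty");
  // The manifest comment describes endpoints as hostnames,
  // so flag anything that contains a scheme or a path.
  for (const host of m.endpoints) {
    if (host.includes("/")) {
      problems.push(`endpoint "${host}" should be a bare hostname`);
    }
  }
  return problems;
}

// Same values as the default manifest in this tutorial.
const defaultManifest: ManifestLike = {
  specVersion: "0.0.1",
  endpoints: [],
  config: {},
  model: "llama3.2:1b",
  entry: "./project.ts",
};

// An empty list means no problems were found.
console.log(checkManifest(defaultManifest));
```

A check like this is optional; it only catches obvious mistakes such as an empty model name before the app is started.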

4. Configure System Prompt Logic

To configure the app, you’ll need to edit the project entry point file (e.g., project.ts in this example). The project entry point is where the tools and system prompt are initialised.

A good first place to start is by updating your system prompts. System prompts are the basic way you customise the behaviour of your AI agent.

const entrypoint: ProjectEntry = async (config: Config): Promise<Project> => {

return {

tools: [],

systemPrompt: `You are an agent designed to greet a user in the strangest way possible.

Always ask for the user's name first before you greet them. Once you have this information, you can greet them in a unique way.

Your greeting should be weird, perhaps a pun or dad joke with their name. Please be funny, interesting, weird, and/or unique.

If you need more information to greet the user, ask the user for more details.`,

};

};

export default entrypoint;

5. Add a Function Tool

Adding function tools is an important step for any integrated AI App. Function tools are functions that extend the functionality of the LLM. They can be used for many things, such as requesting data from external APIs and services, performing computations, or analysing structured data outputs from the AI. You can read more about function tooling here.
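
Conceptually, a function tool is a named function with a description and a list of required arguments, which a runner looks up and calls on the model's behalf. The sketch below illustrates that idea in plain TypeScript. It is an assumption-laden illustration, not the framework's implementation: `SimpleTool`, `upperCaseTool`, and `dispatch` are hypothetical names, and the call is synchronous for brevity.

```typescript
// Hypothetical tool shape used only for this illustration.
interface SimpleTool {
  name: string;
  description: string;
  required: string[];
  call(args: Record<string, unknown>): string | null;
}

// A toy tool that upper-cases its "text" argument.
const upperCaseTool: SimpleTool = {
  name: "upper-case",
  description: "Upper-cases the given text.",
  required: ["text"],
  call: (args) => String(args.text).toUpperCase(),
};

const tools: SimpleTool[] = [upperCaseTool];

// Look up the requested tool, check its required arguments, then run it.
function dispatch(
  available: SimpleTool[],
  toolName: string,
  args: Record<string, unknown>,
): string | null {
  const tool = available.find((t) => t.name === toolName);
  if (!tool) throw new Error(`Unknown tool: ${toolName}`);
  const missing = tool.required.filter((key) => !(key in args));
  if (missing.length > 0) {
    throw new Error(`Missing arguments: ${missing.join(", ")}`);
  }
  return tool.call(args);
}

console.log(dispatch(tools, "upper-case", { text: "hello" }));
```

The description and required-argument list matter because they are what the model relies on when deciding whether and how to request a tool.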

We're going to add a simple function tool that does nothing more than take a name as input and reverse it. For example, alice would become ecila, and bob would remain bob because it reads the same in both directions. To accomplish this, we need to modify the code as follows:

class ReverseNameTool extends FunctionTool {

description = `This tool reverses the user's name.`;

parameters = {

type: "object",

required: ["name"],

properties: {

name: {

type: "string",

description: "The name of the user",

},

},

};

async call({ name }: { name: string }): Promise<string | null> {

// Reverse the order of the input name

return await name.split("").reverse().join("");

}

}

// deno-lint-ignore require-await

const entrypoint: ProjectEntry = async (config: Config): Promise<Project> => {

return {

tools: [new ReverseNameTool()],

systemPrompt: `You are an agent designed to greet a user in the strangest way possible.

Always ask for the user's name first before you greet them. Once you have this information, you can greet them in a unique way.

Your greeting should be weird, perhaps a pun or dad joke with their name. Please be funny, interesting, weird, and/or unique.

ALWAYS REVERSE THEIR NAME USING THE REVERSENAMETOOL BEFORE GREETING THEM!

Do not mention that you used a tool or the name of a tool.

If you need more information to greet the user, ask the user for more details.`,

};

};

export default entrypoint;

First, define the function tool by creating a class (class ReverseNameTool extends FunctionTool { ... }). Next, add this new function tool to the list of tools (tools: [new ReverseNameTool()],). Lastly, update the system prompt to instruct the AI to always reverse the name with the tool before greeting (ALWAYS REVERSE THEIR NAME USING THE REVERSENAMETOOL BEFORE GREETING THEM!).
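
The reversal logic itself can be tried outside the framework. One caveat: split("") splits a string into UTF-16 code units, so names containing characters outside the Basic Multilingual Plane (for example, emoji) would come out with broken surrogate pairs. The sketch below is one possible variant that reverses by Unicode code point instead; `reverseName` is a hypothetical standalone helper, not part of the tutorial's tool class.

```typescript
// Reverse by Unicode code point rather than by UTF-16 code unit.
// Array.from iterates a string code point by code point, so
// surrogate pairs stay intact.
function reverseName(name: string): string {
  return Array.from(name).reverse().join("");
}

console.log(reverseName("alice")); // ecila
console.log(reverseName("bob")); // bob
```

This still reorders combining marks relative to their base characters, so it is a small improvement rather than a complete solution for every script.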

6. Run the App

We can run the project at any time using the following command, where -p is the path to manifest.ts and -h is the URL of the Ollama endpoint.

subql-ai -p ./manifest.ts -h http://host.docker.internal:11434

Once the project is running, you should see the following: Listening on http://0.0.0.0:7827/. You can now interact with your application. The easiest way to do that is to run the REPL in another terminal.

subql-ai repl

This will start a CLI chat. You can type /bye to exit. Alternatively, it is possible to launch the app via Docker.

You should review the instructions on running locally or via Docker.

Summary

You now have a running SubQuery AI App that uses the latest LLMs and incorporates a function tool. This is a simple example, but it's a great starting point for building complex AI Apps and agents that are custom-built for your application.

From here you may want to look at the following guides:

- Detailed documentation on the AI App Manifest.

- Enhance your AI App with function tooling.

- Give your AI App more knowledge with RAG support.

- API of AI App.

- Publish your AI App so it can run on the SubQuery Decentralised Network.